Should you take a multivitamin?

Over half of American adults and 70 percent of the elderly take a vitamin or supplement, and research analysts predict that the global market for supplements will climb to $300 billion by 2024.

Multivitamins make up a significant chunk of this market. A 2006 National Institutes of Health (NIH) conference revealed that 20-30% of Americans use a multivitamin daily, and many more Americans effectively take a multivitamin by eating fortified grain products, like Shredded Wheat cereal and Wonder Bread.

But is this a good idea? Are multivitamins really necessary? If so, who would benefit? And what should you look for in a multi? Those are exactly the questions I’ll answer in this article.

Why a healthy diet should always be the primary source of nutrients

There’s an idea that has become prevalent in some circles (especially in Silicon Valley) that we don’t need food at all, and that we can meet all of our nutrient needs with a “Soylent Green” type of fortified beverage.

Apart from how disgusting those beverages are and how much pleasure and joy we miss from eating real food, there’s no evidence that replacing food with synthetic nutrients is safe or beneficial—and plenty of reasons to think that it isn’t.

Humans are adapted to getting nutrients from whole foods. Most nutrients require enzymes, synergistic co-factors and organic mineral-activators to be properly absorbed. While these are naturally present in foods, they are often not included in synthetic vitamins with isolated nutrients.

In a paper titled “Food Synergy: An Operational Concept for Understanding Nutrition,” published in the American Journal of Clinical Nutrition, the authors emphasized the importance of obtaining nutrients from whole foods and concluded:

A person or animal eating a diet consisting solely of purified nutrients in their Dietary Reference Intake amounts, without benefit of the coordination inherent in food, may not thrive and probably would not have optimal health. This review argues for the primacy of food over supplements in meeting nutritional requirements of the population.

They cautioned against reductionist thinking, which is common in conventional medicine and nutritional supplementation, and instead urged us to consider the importance of what they call “food synergy”:

The concept of food synergy is based on the proposition that the interrelations between constituents in foods are significant. This significance is dependent on the balance between constituents within the food, how well the constituents survive digestion, and the extent to which they appear biologically active at the cellular level.

They go on to provide evidence that whole foods are typically more effective than supplements in meeting nutrient needs:

- Tomato consumption has a greater effect on human prostate tissue than an equivalent amount of lycopene.

- Whole pomegranates and broccoli had greater antiproliferative and in vitro chemical effects than did some of their individual constituents.

- Free radicals were reduced by consumption of brassica vegetables, independent of micronutrient mix.

This is why it’s so important to get as many nutrients as we can from food. A whole-foods diet will naturally provide a solid foundation of the many vitamins, minerals, and trace nutrients that we need to thrive, and these micronutrients work synergistically together to support our health.

And in a perfect world, we’d be able to meet all of our nutrient needs through food alone.

Sadly, that is not the world we live in.

Why supplementation may be necessary in the modern world

While our distant ancestors were able to get all of the nutrition they needed from their diet, there are several challenges that we face today that make this difficult. These include:

- Changes in soil quality, which reduce nutrient availability.

- A rise in chronic disease, which increases the demand for nutrients and reduces their absorption.

- A shift to a global, rather than local, food system. Nutrient levels in produce begin to decline as soon as a food is harvested, so food that has been shipped 3,000 miles (common today) has far lower nutrient levels than local food.

- A shift to industrialized agriculture. Organic produce, pasture-raised animal products, and wild-caught seafood generally have higher nutrient levels than conventional produce, CAFO meat, and farmed seafood.

- An increase in toxins like heavy metals and glyphosate in the food supply. These toxins bind to nutrients and decrease their bioavailability.

- An epidemic of chronic stress, which depletes nutrients and increases our demand for them.

- Over-the-counter and prescription medications (e.g., metformin) that deplete key nutrients and/or affect their bioavailability.

- Greater numbers of people following restricted diets and doing intermittent fasting, both of which reduce nutrient intake.

The result of all of these changes is that most people in the industrialized world are falling short not on just one essential micronutrient, but on several.

How common are nutrient inadequacies?

When I say that “most people aren’t getting enough micronutrients”, I’m not talking about full-blown nutrient deficiencies that cause acute diseases like rickets, pellagra, and scurvy. Those are relatively rare in the modern world. I’m speaking of what scientists refer to as “nutrient inadequacies”, which means not getting the amount of a nutrient that we need to support optimal health and function.

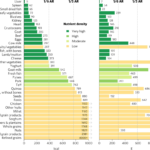

Nutrient inadequacies are shockingly common in industrialized countries like the United States. Consider the following U.S. statistics compiled by the Linus Pauling Institute:

- 100% don’t get enough potassium

- 94% don’t get enough vitamin D

- 92% don’t get enough choline

- 89% don’t get enough vitamin E

- 67% don’t get enough vitamin K

- 52% don’t get enough magnesium

- 44% don’t get enough calcium

- 43% don’t get enough vitamin A

- 39% don’t get enough vitamin C

Source: https://lpi.oregonstate.edu/mic/micronutrient-inadequacies/overview

As disturbing as these statistics are, they are almost certainly underestimated.

There are many reasons for this. First, the Recommended Dietary Allowance (RDA) for most nutrients is based on factors like average body weight. The average body weight of American men and women has changed dramatically over the past 25 years, but the RDAs have not been updated.

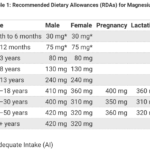

Magnesium is a prime example of this. The current RDA for magnesium is 320 mg for women and 420 mg for men. These RDAs were last published in 1997, using average body weights of 133 lbs for adult women and 166 lbs for adult men. Yet today, the average body weight is 168.5 lbs for women and 196 lbs for men—a significant increase in a short period of time.
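To make the body-weight argument concrete, here is a minimal Python sketch. It assumes, purely for illustration, that the magnesium requirement scales linearly with body weight (a fixed number of mg per pound). That simplification is not the method used by the 2021 study discussed below, and real requirements need not scale this way. The function names are hypothetical.

```python
# Sketch: rescale the 1997 magnesium RDAs to today's average body weights.
# ASSUMPTION: linear mg-per-pound scaling, used only for illustration;
# this is not the method of the 2021 study cited in the article.

RDA_1997_MG = {"women": 320, "men": 420}          # mg/day
WEIGHT_1997_LB = {"women": 133, "men": 166}       # reference weights in 1997
WEIGHT_TODAY_LB = {"women": 168.5, "men": 196}    # current average weights


def weight_increase_pct(sex: str) -> float:
    """Percent change in average body weight since the 1997 reference values."""
    old, new = WEIGHT_1997_LB[sex], WEIGHT_TODAY_LB[sex]
    return (new - old) / old * 100


def weight_scaled_rda(sex: str) -> float:
    """1997 RDA rescaled in proportion to today's average body weight (mg/day)."""
    mg_per_lb = RDA_1997_MG[sex] / WEIGHT_1997_LB[sex]
    return mg_per_lb * WEIGHT_TODAY_LB[sex]


for sex in ("women", "men"):
    print(f"{sex}: weight +{weight_increase_pct(sex):.1f}%, "
          f"weight-scaled RDA ~{weight_scaled_rda(sex):.0f} mg/day")
```

Even this crude adjustment gives roughly 405 mg/day for women and 496 mg/day for men, both well above the average intakes cited below. The 2021 study's own estimates are higher still.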

In 2021, researchers published a study arguing that the RDAs for magnesium should be updated to reflect the increasing average body weight of the US population. They re-calculated the RDAs for magnesium according to the current average weights of male and female adults, and came up with the following values:

- Female adults: 467–534 mg/d

- Male adults: 575–657 mg/d

The average intake of magnesium for US adults is 340–344 mg/d for men and 256–273 mg/d for women.

This means that most Americans are not getting enough magnesium—even if we use the lower, outdated RDA values. And Americans are falling far short of the recommended magnesium intake if we use the more accurate, updated RDAs based on current average body weight.

In fact, the average man and woman over 31 years of age are consuming 200–300 mg less magnesium per day than they need!

If we were to use these more accurate, updated RDA values for magnesium, it’s likely that closer to 100 percent of the population would fall into the “inadequate intake” category.
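The 200–300 mg shortfall can be checked directly from the figures above. This is a simple sketch, not the study's own analysis: it pairs the ends of each range (lowest recommendation against highest intake, and vice versa) to bracket the gap, and the names are illustrative.

```python
# Gap between the 2021 weight-adjusted magnesium recommendations and
# reported average US intakes. All values are mg/day, taken from the text.
UPDATED_RDA = {"women": (467, 534), "men": (575, 657)}
AVERAGE_INTAKE = {"women": (256, 273), "men": (340, 344)}


def shortfall_range(sex: str) -> tuple[int, int]:
    """Return the (smallest, largest) daily magnesium shortfall in mg/day.

    Smallest gap: lowest recommendation minus highest average intake.
    Largest gap: highest recommendation minus lowest average intake.
    """
    rda_low, rda_high = UPDATED_RDA[sex]
    intake_low, intake_high = AVERAGE_INTAKE[sex]
    return rda_low - intake_high, rda_high - intake_low


for sex in ("women", "men"):
    low, high = shortfall_range(sex)
    print(f"{sex}: short by {low}-{high} mg/day")
```

This brackets the gap at about 194–278 mg/day for women and 231–317 mg/day for men, consistent with the roughly 200–300 mg figure.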

Magnesium is just one example. There are similar issues with the RDA or recommended intake for many other nutrients as well:

- The RDA for vitamin B12 needs to be 300-500% higher in order to reliably avoid signs and symptoms of B12 deficiency.

- The RDA for vitamin D, which is currently 600 IU, should be at least 1,000 IU to lower the risk of vitamin D deficiency.

- The Linus Pauling Institute has argued that the RDA for vitamin C should be raised to 200 mg per day.

There are several other reasons that using the RDA as the threshold for nutrient sufficiency is problematic.

First, the RDA was originally developed during World War II to guide the design of nutritious rations for soldiers, so that they didn’t develop acute diseases of malnutrition like scurvy, rickets, or pellagra. It is not the amount needed for optimal health and function, which is likely higher, as the examples I provided above indicate.

Second, the RDA does not take health status into account. We now know that people with chronic diseases like obesity, diabetes, hypothyroidism, etc. have a greater need for certain nutrients. For example, the bioavailability of vitamin D from sunlight and food is lower in obese individuals than in lean individuals. This is not reflected in the current RDAs.

Third, the RDA doesn’t consider the importance of nutrient synergy. Nutrients aren’t isolated from each other in our bodies. Instead, they have complex and often synergistic relationships. Magnesium is required for the absorption and activation of vitamin D. Copper is required for the absorption of iron. Vitamin K2 regulates calcium metabolism, ensuring it ends up in the bones and teeth (where it belongs) and stays out of the soft tissues like the blood vessels and kidneys (where it doesn’t belong). Even if you are meeting the RDA for a particular nutrient, like vitamin D, you can still end up functionally deficient in it if you’re not getting enough of the other nutrients it requires for normal function.

Fourth, the RDA doesn’t consider the bioavailability or form of nutrient that is consumed. Beta-carotene, a vitamin A precursor, is lumped together with retinol, the active form of vitamin A—despite research indicating that some people are poor converters of carotenes to retinol. The bioavailability of calcium from spinach is only 5 percent. Of the 115 mg of calcium present in a serving of spinach, only 6 mg is absorbed. This means that the actual contribution of spinach toward the RDA for calcium is far lower than food labels would suggest.
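The spinach arithmetic is simply nutrient content multiplied by the fraction absorbed. Here is a minimal sketch using the 115 mg and 5 percent figures from the text; the helper name is hypothetical.

```python
def absorbed_mg(content_mg: float, bioavailability: float) -> float:
    """Amount of a nutrient actually absorbed, given the fraction absorbed (0 to 1)."""
    if not 0.0 <= bioavailability <= 1.0:
        raise ValueError("bioavailability must be a fraction between 0 and 1")
    return content_mg * bioavailability


# Calcium in one serving of spinach: 115 mg present, about 5 percent absorbed.
spinach_calcium = absorbed_mg(115, 0.05)
print(f"{spinach_calcium:.2f} mg absorbed")
```

This gives 5.75 mg, which rounds to the 6 mg cited above.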

The inescapable conclusion is that far more people are suffering from nutrient inadequacies than the statistics above from the Linus Pauling Institute suggest. Given that those numbers already indicate that the majority of Americans are not getting enough of multiple essential nutrients, does this mean that virtually all of us are falling short on several micronutrients?

That very well may be the case.

Why nutrient inadequacy may be a bigger problem than we think

Historically, micronutrients were thought of as compounds crucial for survival or protection against severe ill health. Now, we are beginning to realize the critical but unappreciated role they play in optimal function, aging, and longevity.

We need about 40 micronutrients to function optimally. When we don’t get enough of even just a few of these, our bodies start to break down—and our lifespan shortens.

Dr. Bruce Ames, a professor of biochemistry and molecular biology at UC Berkeley, has developed a hypothesis for why this happens, called Triage Theory.

He proposes that all proteins and enzymes in the body be classified into two categories: survival proteins and longevity proteins.

Survival proteins are those that we need for immediate, short-term survival. Longevity proteins are those that contribute to longer-term health and well-being.

For example, vitamin K-dependent proteins could be categorized into those required for short-term survival (primarily blood-clotting functions) and those involved in long-term health (regulating calcium metabolism and supporting cellular health).

Triage Theory holds that modest deficiency of even a single nutrient triggers a built-in rationing mechanism that favors the proteins needed for immediate survival and reproduction (survival proteins) while sacrificing those needed to protect against future damage (longevity proteins).

This is true because survival and longevity proteins often require the same vitamins, minerals, and other nutrients to function properly. If there’s a shortage of a particular nutrient, the body will always prioritize what’s needed for short-term survival. That’s an evolutionary imperative for us to be able to pass on our genes. Evolution is nothing if not efficient!

This is a dramatic shift in how scientists are now thinking about the role of nutrients in human health.

If Ames’ Triage Theory is true—and there’s certainly a lot of supporting evidence—it would explain why nutrient inadequacies that aren’t enough to cause overt clinical symptoms still contribute significantly to the aging process and the diseases of aging.

Why optimizing nutrient status is so difficult

But how do you know if you’re not getting the optimal amount of a nutrient and have developed a “nutrient inadequacy”?

Unfortunately, this is difficult to determine. With a full-blown nutrient deficiency, you would almost certainly develop the characteristic signs and symptoms that go along with a very low intake of that nutrient.

But with nutrient inadequacy, you may not develop any symptoms in the short term. If you do, they’ll likely be non-specific symptoms like low energy, brain fog, poor sleep, and digestive or skin issues, which are exactly the type of mild symptoms that almost everyone today experiences to some degree.

It’s also unlikely that your doctor or healthcare provider will be of much help here. Testing for nutrient status is notoriously difficult and complex.

I know this firsthand. I’ve tested virtually all of my patients for nutrient status over my 15-year career as a Functional Medicine clinician, and I’ve also trained several thousand healthcare professionals on how to do it.

It’s extremely difficult to get a clear picture of what’s going on without running hundreds or even thousands of dollars’ worth of tests, because different nutrients require different methods to be detected accurately.

For example, 99.5% of magnesium in our bodies is stored in the tissues. So, if we try to measure it in the serum or even inside of the red blood cell—which are the two commonly available ways of doing it—we’re not going to get a true picture of magnesium status.

There’s a similar problem with calcium. It has to be maintained within a very tight range in our blood, so if dietary intake of calcium falls, the body will remove calcium from the bone just to maintain the normal range in the blood. This means that even when someone is not consuming enough calcium in their diet, the blood test will be normal.

It’s even worse for vitamin K2: we don’t have a way of measuring it in any body fluid or tissue.

I could go on, but you get the idea: most people who aren’t getting the optimal amount of nutrients in their diet don’t have a clear way of knowing that they’re coming up short.

What does this mean for those of us who are interested in optimizing our healthspan?

Well, if we want to optimize our health in the short term and live a long, disease-free life, we need to ensure an optimal intake of the 40 micronutrients that our bodies need.

I’m not talking about just getting the amount of a nutrient that is required to avoid acute disease. I’m talking about getting the amount needed to avoid Triage, where our bodies prioritize short-term needs and sacrifice long-term needs.

The role of supplements in meeting nutrient needs

Given the sky-high rates of nutrient inadequacy, the difficulty of meeting our nutrient needs exclusively from food, and the potentially serious consequences of suboptimal intake of essential micronutrients, it should be clear that supplementation has an important role to play.

Yet I still frequently see headlines in the mainstream media like “supplements don’t work” or “don’t waste your money on supplements.”

These are ridiculous claims. It’s like saying “food is healthy” or “medications are effective”. Which foods? Which medications? We understand that there are huge differences between one food or one medication and another, yet journalists (and even scientists) tend to group supplements and multivitamins into one broad category.

The truth is that there is massive variation in the quality and thus efficacy of nutritional supplements. And research shows that they can be incredibly effective—when they are well-designed and properly administered. For example:

I could go on… and on… and on. There are literally thousands of studies published in the scientific literature supporting the efficacy of supplements when used appropriately.

But it’s not all roses, of course. There are also examples of supplements causing harm. Some nutrients can be toxic when taken at high doses and/or over a long period of time, especially in synthetic forms that aren’t found in a natural diet.

A prime example of this is the now infamous JAMA meta-analysis on antioxidants. The researchers looked at 68 trials with over 230,000 participants and found that long-term supplementation with high doses of beta carotene (a vitamin A precursor) and alpha-tocopherol (the most common form of vitamin E) may increase the risk of heart disease and early death.

Another example is calcium supplementation. A meta-analysis of studies involving more than 12,000 people, published in the BMJ, found that calcium supplementation increases the risk of heart attack by 31 percent, stroke by 20 percent, and death from all causes by 5 percent. (Check out my article Why You Should Think Twice About Taking Calcium Supplements for more on this.)

The message here is clear: supplements should be evaluated individually based on numerous factors, including:

- What condition they are being taken for

- The form and bioavailability of the nutrient in the product

- The dosage being taken

- The quality of the ingredients (and whether the product contains impurities)

- Interactions with other nutrients that play a synergistic role

- And more…

Can multivitamins help? And what to look for if you take one.

Early in my career, I did not typically advise my patients to take a multivitamin. I believed that it was preferable to meet our nutrient needs from food, and I encouraged them to make their diet as nutrient-dense as possible in order to do that.

But I have a different perspective now. I’ve learned from the scientific literature how common nutrient inadequacy is and how serious the consequences can be. And I’ve seen these impacts firsthand in my work with patients over the last 15 years.

I still think that a whole foods diet should always form the foundation of our nutrient intake. But I now believe that a multivitamin can serve as an “insurance policy” to ensure that we’re getting the optimal amount of nutrients we need—not just to avoid disease—but to thrive and flourish in the modern world.

That said, it’s critical to realize that not all multivitamins are created equal.

If you just walk into the local grocery or drug store and pull something off the shelf, chances are that it won’t help very much—and it may even cause harm.

Why? Because many common multivitamins suffer from three problems:

- They don’t have enough of the nutrients you need

- They don’t have the right form of each nutrient

- They have too much of certain nutrients

Let’s look at each of these issues in turn.

Not enough of the nutrients you need

As I noted earlier in the article, the RDA for many nutrients is out of date and significantly lower than it should be. Many multivitamins are designed with the RDA in mind, which means that they don’t provide enough of nutrients like vitamin C, vitamin D, vitamin B12, and magnesium.

The gaps between what people need and what is provided can be enormous. For example, many multis provide 400 IU of vitamin D, whereas most scientists now believe that 1,000 IU should be the minimum (and many suggest 2,000 IU would be better).

The wrong forms of some nutrients

Most multivitamins are made with cheap, synthetic ingredients that aren’t well-absorbed and don’t mimic the nutrients we get from food.

Folic acid is a good example. Folic acid is an oxidized synthetic compound used in some supplements and food fortification, whereas folate is the form of vitamin B9 naturally found in food. Unlike natural folates, folic acid has to be converted into an active form, a process that is slow in many people, so it can end up unmetabolized in the bloodstream, where it may increase the risk of cancer (in some people).

Another example is vitamin B12. Many supplements contain cyanocobalamin, a less active form of B12. While cyanocobalamin can be converted into the more active forms of B12 (methylcobalamin and adenosylcobalamin), that conversion is inefficient in many people. Many studies have shown that hydroxocobalamin is superior to cyanocobalamin, and that methylcobalamin is superior to both.

Supplementation with alpha-tocopherol, the common form of vitamin E that is used in many multivitamins and supplements, has been associated with a higher risk of cancer. This effect is not observed with dietary vitamin E or with supplementation with tocotrienols, a more recently discovered form of vitamin E.

A multivitamin should contain food-based, naturally occurring, or bioidentical ingredients—so it can build upon the nutrition we get from a whole-foods diet.

Too much of certain nutrients

Given the extremely high rates of nutrient inadequacy, it might seem strange for me to claim that some multivitamins have too much of certain nutrients. But some nutrients can be toxic at high doses, and more is not always better.

Iron is a prime example. While iron deficiency is a much more common problem, iron overload is a serious and often unrecognized issue that affects as many as 1 in 200 people in North America. High doses of iron in supplements and multivitamins may contribute to iron overload in these people—and they often remain undiagnosed and unaware of their condition.

I already mentioned the problem with high-dose calcium supplementation earlier in this article. It can increase the risk of heart attack, stroke, and death from all causes.

Iodine supplementation at high doses can also be harmful. Iodine was added to salt in the U.S. and other countries around the world because of high rates of iodine deficiency. This was helpful and necessary. But excess iodine intake can be problematic. A study of about 250 participants with normal thyroid function found that supplemental doses of iodine greater than 400 micrograms induced subclinical hypothyroidism in some participants.

With all of this in mind, here’s what you should look for in a multivitamin:

- It should feature food-based, naturally occurring, or bioidentical ingredients

- It should contain the most active and effective forms of each nutrient

- It should have adequate doses of nutrients that we fall short of, like vitamin D and magnesium

- It should not have high doses of nutrients that can be toxic, like iron, iodine, calcium, or alpha-tocopherol

I hope this article clears up the confusion about multivitamins and helps you make an informed decision about whether to take one.